The rapid buildout of the largest data center project in history is hard to keep up with.

In the course of conversations over several months with OpenAI staffers about the trillion-dollar Stargate project, the scope and scale of the endeavor have morphed, growing ever larger and more expensive.

“So much changes every day,” the director of physical infrastructure Keith Heyde tells DCD in an interview crammed between project calls. “The top-level goals still remain the same. I think the execution strategy on how to get to those goals is what is dynamic. Which partners we’re leaning into more, which ones we’re trying to make sure we’re able to couple with tightly, is constantly in flux.

“We have an exceptional and awe-inspiring amount of compute demand that’s been asked to be delivered.”

A version of this feature appeared in Issue 57 of the DCD Magazine, but has been expanded with more interviews and details on the latest deals and announcements.

A bet on scaling laws

Stargate began as a thought experiment. The current view on training multimodal large language models (MLLMs) is that the more compute you put in (along with ever more data), the better the model. To keep up its breakneck pace in software, therefore, OpenAI needed a similarly aggressive approach to hardware.

“We have a very strong conviction about scaling laws,” Chris Malone, OpenAI’s head of data centers, says. “We’ve seen remarkable improvements in the performance of the models over time, and it seems like that will continue to scale. Now, unfortunately, we’re in the way [of that progressing] on the data center side as we’re short on capacity constantly. One of our main goals is to unlock that.”

The result of this thought process was a plan for an extremely large data center, comparable in capacity to the entire 2025 Virginia data center market, which is home to the world’s largest collection of data centers.

OpenAI had initially hoped to get Microsoft, at the time its exclusive cloud provider and biggest backer, on board with the $100bn 5GW mega data center. But talks fell apart in 2024, with Microsoft increasingly cautious about how much it was spending on a growing rival in an unproven sector, and OpenAI similarly growing frustrated with the hyperscaler’s comparatively slow pace.

The election of Donald Trump provided an opportunity for Stargate to be reborn. Emboldened by a president promising to cut red tape, and keen to stay ahead of rival Elon Musk, then a presidential confidant, OpenAI announced that Stargate would be a $500 billion project to build a number of large data center campuses across the US over four years.

The end result is a Stargate that is markedly different from the original single-facility vision. It is far broader and more ambitious, but, for now, its individual data centers are smaller. Even so, they could still be larger than any currently on the market.

At the same time, much about Stargate remains ill-defined. This is partially intentional, as the small and scrappy team looks to develop the strategy in the years to come. But it’s also due to the speed of announcements, some of which are not yet backed by complete funding or contract details and are instead tied to political moments, such as Trump’s inauguration or his visit to the Middle East.

Much speculation has swirled about how much money Stargate actually has. Soon after it was announced, Sam Altman told colleagues that OpenAI and SoftBank would each invest $19 billion into the venture, The Information reported at the time. Oracle and Abu Dhabi’s MGX are believed to be chipping in a combined $7bn.

SoftBank is also in talks with debt markets about additional funding and has itself invested in OpenAI (giving OpenAI enough cash to cover its initial investment in Stargate). OpenAI will likely seek to go public during Stargate’s rise to help foot the expanding bill.

Altman has since said that Stargate and OpenAI’s cloud commitments total some $1.4 trillion, a truly unprecedented level of compute spend, and significantly more than the company can currently afford.

Individual Stargate projects are themselves an intricate web of investments. At the furthest-along site, in Abilene, Texas, developer Crusoe has secured $15 billion in debt and equity, with the funds managed by Blue Owl’s Real Assets platform, along with Primary Digital Infrastructure. Most of that funding came from JPMorgan through two loans totaling $9.6bn.

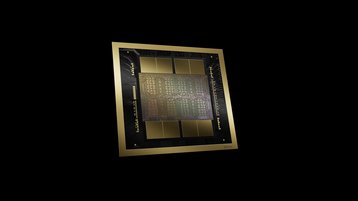

Oracle is currently in talks to spend as much as $40bn on 400,000 Nvidia GB200 GPUs for the site, per the Financial Times, which it would then lease to OpenAI.

Heyde, who joined this time last year after four years at Meta, is less concerned about the nitty-gritty of the accounting. “There’s sort of a money flywheel that I don’t bother with,” he says. “That’s a related team. I try to assume that the money flywheel will provide what I need.

“I think about the power/land flywheel, which is the lowest step. Then I think about the shell flywheel. And then I think about the IT flywheel.”

It begins in Texas

Abilene is the first of the Stargates in the US, with OpenAI essentially piggybacking on an existing proposed megaproject. The site is, like everything else involved with Stargate, a complex matryoshka doll of interlinked interests.

At the base is the Lancium Clean Campus, owned by Blackstone-backed Lancium, a crypto miner-turned AI data center developer that this October raised $600m in debt led by Santander Corporate & Investment Banking. The company leased its site to Crusoe Energy, another crypto pivoter, which then began work on data centers that were originally set to be leased to Oracle.

Back in 2024, the initial plan was for Crusoe to build 200MW for Oracle. The cloud provider, which was falling ever further behind the hyperscalers in the pre-generative AI era but has since spent billions on large projects to catch up, was then in talks with Musk to use it for his AI venture, xAI. But, when he pulled out in favor of building his own site in Memphis, OpenAI swooped in.

At the time, due to the exclusivity contract between OpenAI and Microsoft, the deal had one added layer of complexity – Oracle would lease the 200MW to Microsoft, which would then provide it to OpenAI. With Stargate, DCD understands that the extra step has been removed.

Also with Stargate, the ambitions have been ramped up: Crusoe is now planning on building out the entire 1.2GW capacity of the Lancium campus. However, in a nod to the speed and chaos of the early days of Stargate, DCD understands that Crusoe executives at the PTC conference in Hawaii this January were just as surprised to learn about the project being greenlit as the rest of the world.

“The data halls at Abilene are like 30MW,” Heyde reveals. “But then the total site for us could go above a gig if we expand it as far as it could go.”

Construction of the first phase, featuring two buildings and more than 200MW, began in June 2024, and the phase was energized this September.

The second phase, consisting of six more buildings and another gigawatt of capacity, began construction in March 2025 and is expected to be energized in mid-2026. One substation has been built, with another on the way – power is also provided by on-site gas generators. Oracle has agreed to lease the site for 15 years.

“It’s us and Oracle,” Heyde explains. “And then you have your tech stuff that’s going to Foxconn, and your shell stuff that’s going to Crusoe. And then under Crusoe, there’s [construction contractor] DPR, and there’s all the subcontractors like Rosendin on the site.”

With the first two buildings about to be ready, Heyde has been making regular trips to the campus. “My team was there yesterday, I was there a couple of weeks ago,” he tells us. “It’s more about the rack readiness than necessarily base readiness. As we get these GB200 racks off the line, that’s the name of the game right now.”

Roughly three hours’ drive from Abilene, another OpenAI project is taking shape. Core Scientific is refurbishing one of its Bitcoin mining data centers, just north of Dallas, and pitching the site as an AI data center hotspot instead.

That site is then leased to CoreWeave, which is working with OpenAI. “Our interactions are with CoreWeave,” Heyde says. OpenAI plans to spend $22.4 billion on services from the neocloud, although it is unclear if all of it will go through Stargate.

In the same month the first halls at Abilene went live, OpenAI significantly expanded its contract with Oracle to a record $300 billion over five years, which includes 4.5GW of capacity beyond the original site.

It has since announced plans for five more US-based Stargates, including a 1GW Michigan data center developed by Related Digital and a large facility in Wisconsin from Vantage. Others are planned in New Mexico and Texas, all of which are being built and operated in partnership with Oracle.

The New Mexico facility is backed by $3 billion from Blue Owl, The Information reports, while as much as $18 billion could come from loans led by Sumitomo Mitsui, BNP Paribas, Goldman Sachs, and Mitsubishi UFJ Financial. That facility alone could grow to 4.5GW at full buildout.

OpenAI has also signed a cloud contract with Google, which includes the use of its TPUs, and a $38bn multi-year cloud deal with AWS. As for Microsoft, its one-time exclusive compute provider, OpenAI this October finalized its for-profit shift and removed Microsoft’s right of first refusal on its cloud compute.

At the same time, it agreed to a $250bn Azure contract over an undisclosed time period. Leaked documents sent to Ed Zitron show that OpenAI spent $5.02 billion on inference alone with Azure in the first half of 2025, up from $3.767bn for all of 2024.

Some of these cloud contracts may themselves then be farmed out to CoreWeave data centers, complicating an exact accounting of the scale of investment.

A further layer of complexity comes from OpenAI’s deals with silicon providers, which have helped fund its rise both to maintain market growth and to keep it as a customer.

The silicon supports

Most notably, following OpenAI’s use of Google TPUs, Nvidia announced plans to invest as much as $100bn into the company, which OpenAI will then spend on 10GW of Nvidia-based AI data centers.

Soon after, AMD announced it would supply OpenAI with 6GW of compute, giving OpenAI the right to buy up to 160 million AMD shares (around 10 percent of the company) at just one cent apiece.
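The terms are easier to weigh with the arithmetic spelled out. The short sketch below uses only the figures reported here (160 million shares, one cent each, roughly 10 percent of AMD) and works in integer cents to avoid floating-point rounding. It ignores any conditions attached to the deal and the market value of the shares.

```python
# Figures as reported: 160 million AMD shares at one cent each,
# described as about 10 percent of the company.
SHARES = 160_000_000
PRICE_CENTS = 1      # one cent per share
STAKE_PERCENT = 10   # "10 percent of AMD", an approximate figure


def purchase_cost_dollars(shares: int, price_cents: int) -> float:
    """Nominal cost, in dollars, of buying `shares` at `price_cents` each."""
    return shares * price_cents / 100


def implied_shares_outstanding(shares: int, stake_percent: int) -> int:
    """Total share count implied if `shares` is `stake_percent` of the company."""
    return shares * 100 // stake_percent


print(f"Nominal purchase cost: ${purchase_cost_dollars(SHARES, PRICE_CENTS):,.0f}")
print(f"Implied AMD shares outstanding: {implied_shares_outstanding(SHARES, STAKE_PERCENT):,}")
```

In other words, the nominal purchase price is about $1.6 million, for a stake in a company with roughly 1.6 billion shares.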

This was followed by Broadcom’s promise to deploy 10GW of its custom AI chips for OpenAI by 2029. The companies are co-developing AI accelerators, with OpenAI’s efforts led by former TPU lead Richard Ho.

Should all of this come to pass, it will mean that OpenAI will have to field a much broader variety of compute types than its mostly-Nvidia Hopper and Blackwell fleet of today.

“I only see the number of chip types being much broader,” Joel Morris, OpenAI’s head of compute planning, tells DCD. “So this is going to be an interesting space to solve for.

“We could realize that, for some workloads, Blackwells are really good, and we want to keep continuously scaling on Blackwells, while for other workloads, we might have to find a different avenue and then find a way to optimize.”

Morris’ job is to ensure that there is enough compute, of the right type and in the right location, for the workloads of today and tomorrow. “If GPT works flawlessly for you, I’m the good guy. If not, I’m the bad guy,” he jokes.

“When it comes to AI forecasting, you have this queue of Hoppers and Blackwells and different type of SKUs that are available to serve inference. And then you have the workloads: A multi-modal workload is very different from a regular chat workload. One might need more memory, and the other might not.

“Then there are regional requirements. You might have to have reliability taken into account, and then the performance of a Blackwell within different products might also not be the same. So it’s a multi-dimensional allocation and optimization problem, which you consistently keep learning from. Sometimes you have to make a decision to serve Blackwell for a very specific type of demand and then find a way to make it more efficient for the other product type.”

Some AI models simply do not run without enough compute. “Some have a minimum number of nodes of GPU deployed to run, so we keep playing around with what’s coming in, what’s changing, to keep managing it across how we plan to deploy data centers.”
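Morris’ description maps onto a constrained assignment problem. As an illustration only, the sketch below places workloads on GPU pools with a greedy best-fit rule: each workload goes to the eligible pool (right region, enough memory per GPU, enough free nodes to meet its minimum) that leaves the least unused memory headroom. The pool names, memory sizes, node counts, and the greedy rule itself are hypothetical, not OpenAI’s actual planning method, which would also weigh reliability, per-product performance, and demand forecasts.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Pool:
    """Hypothetical pool of identical GPU nodes in one region."""
    name: str
    region: str
    mem_per_gpu_gb: int
    free_nodes: int


@dataclass
class Workload:
    """Hypothetical workload carrying the constraints Morris describes."""
    name: str
    min_mem_per_gpu_gb: int  # e.g. multimodal may need more memory than chat
    min_nodes: int           # some models will not run below this node count
    regions: set[str]        # regional / reliability requirements
    nodes_wanted: int        # assumed to be >= min_nodes


def allocate(pools: list[Pool], workloads: list[Workload]) -> dict[str, tuple[str, int] | None]:
    """Greedy best-fit placement, processing workloads in the order given.

    Each workload is placed whole on one eligible pool, choosing the pool with
    the least spare memory per GPU so larger-memory SKUs stay free for workloads
    that need them. Returns {workload: (pool, nodes)}, or None if nothing fits.
    """
    plan: dict[str, tuple[str, int] | None] = {}
    for wl in workloads:
        eligible = [
            p for p in pools
            if p.region in wl.regions
            and p.mem_per_gpu_gb >= wl.min_mem_per_gpu_gb
            and p.free_nodes >= wl.min_nodes
        ]
        if not eligible:
            plan[wl.name] = None
            continue
        best = min(eligible, key=lambda p: p.mem_per_gpu_gb - wl.min_mem_per_gpu_gb)
        granted = min(best.free_nodes, wl.nodes_wanted)
        best.free_nodes -= granted
        plan[wl.name] = (best.name, granted)
    return plan


pools = [
    Pool("hopper-us", "us", mem_per_gpu_gb=80, free_nodes=10),
    Pool("blackwell-us", "us", mem_per_gpu_gb=192, free_nodes=8),
    Pool("blackwell-eu", "eu", mem_per_gpu_gb=192, free_nodes=4),
]
workloads = [
    Workload("chat", 40, min_nodes=2, regions={"us"}, nodes_wanted=6),
    Workload("multimodal", 120, min_nodes=4, regions={"us", "eu"}, nodes_wanted=6),
    Workload("large-model", 150, min_nodes=4, regions={"us"}, nodes_wanted=4),
]
plan = allocate(pools, workloads)
print(plan)
```

Here the large-model workload goes unplaced even though six Blackwell nodes remain free across two regions: its region and minimum-node constraints rule out both pools. That kind of stranded capacity is one reason a planner might keep revisiting placements as demand shifts.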

Morris’ team is outside of the Stargate effort, but feeds into it. “There’s definitely a feedback loop that is consistently going around that keeps saying, ‘hey, the demand seems to be this way,’ or ‘this is what the consumer preference looks like, and also how the model evolution looks like.’”

Similarly, the Stargate team has to keep in close communication with compute planners, as well as the core research team. “A really fun part of our job over the summer has been working with the research teams and working with the software teams to decompose their workload needs into those physical rules at the bare minimum,” Heyde says.

“Then we can think about the fastest deployment strategies for that. That latency item that gets talked about, directly correlates to network length, which ties directly into how we think about our building footprint and our campus footprint.”

Stargate goes global

Outside the US, Stargate is pushing forward with multiple projects. In the United Arab Emirates, OpenAI has turned to Oracle, Cisco, Nvidia, and SoftBank to develop a facility supporting up to 5GW in a project led by G42.

The chair and controlling shareholder of G42, Tahnoun bin Zayed Al Nahyan, is the brother of the UAE president. Tahnoun is also the nation’s national security adviser and chair of Stargate-backer MGX.

In Europe, Nscale and Aker are developing a Stargate Norway project with around 100,000 GPUs, while Nscale is also deploying a smaller 8,000-GPU Stargate development in the UK, potentially spread across multiple sites.

Less clear are the other locations OpenAI is evaluating. A 500MW facility has been promised for Argentina, but the project does not appear to have left the letter of intent stage. Similarly, the company has said it is considering developing in Canada.

OpenAI has signed an MoU with SK Telecom to develop an AI data center in the southwest region of South Korea, while OpenAI execs have been spotted touring Asia to scout potential sites in India, Japan, and elsewhere.

Many of the companies involved in these projects are deeply interconnected – with Oracle, SoftBank, and MGX (through G42) funding the overall venture, and some of the individual facilities.

Nvidia, at the same time as investing in OpenAI, has invested in many of the neoclouds that make Stargate’s rapid expansion possible. The company has backed both CoreWeave and Nscale, to name but a few of its vast portfolio, and is thought to be considering backstopping OpenAI’s data center loans. How much of Nvidia’s previously announced investments are rolled into the $100bn/10GW deal is not clear.

“We wouldn’t be able to be where we are today without the partnerships across all these spaces with the partners that exist today, including Oracle and many others who have helped us get this far,” Heyde says.

“I think understanding which data centers we need to develop fully in-house versus which ones we partner on is the core art behind the science here.”

The perfect size

With OpenAI planting its flag in Abilene as the first of its Stargates, other developers have flocked to the region to acquire land and attempt to source power, in the hope of experiencing the network effect seen in pre-generative AI data center booms.

“I think that anytime you have some clustering of a certain skill set in an area, you do create a center of excellence,” Heyde says, but admits he’s uncertain if the same network effects will be found.

“From a tech advantage standpoint, we don’t necessarily think that that is super advantageous. Because, ultimately, your back-end network distance is like the size of the cluster. So there’s an argument of ‘it doesn’t matter if you’re 15 or you’re 600 [kilometers] away.’”

This network limit weighs heavily on Heyde’s mind. “The sweet spot for a training site is between 1GW and 2GW,” he says. “Above 2GW, things start getting a little silly because you have back-end network distances that are a little bit too long. They’re too far to do what you need to do.”

At that point, some racks are so far away from other racks that the data center might as well be spread out.

“Even if you have the perfect flat piece of land, the trickiest part of design is your networking distance,” he says. “If you’re gonna do a single-story data center, at a certain level, the networking distance becomes too big to have a massive, massive cluster there.”
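Heyde’s point is that, within a single training cluster, the back-end network has to span the whole site, so cable distance grows with the size of the cluster. Propagation delay alone scales linearly with that distance. The minimal sketch below assumes light travels through silica fiber at roughly c/1.47 (about 4.9 microseconds per kilometer) and ignores switch hops, serialization, and queueing, all of which add to real-world latency. Apart from the 15km and 600km figures Heyde mentions, the distances are illustrative.

```python
SPEED_OF_LIGHT_KM_PER_S = 299_792.458
FIBER_REFRACTIVE_INDEX = 1.47  # assumption: typical group index of silica fiber


def one_way_delay_us(distance_km: float, n: float = FIBER_REFRACTIVE_INDEX) -> float:
    """Propagation-only delay, in microseconds, over `distance_km` of fiber."""
    speed_km_per_s = SPEED_OF_LIGHT_KM_PER_S / n
    return distance_km / speed_km_per_s * 1e6


for km in (0.5, 2, 15, 600):
    d = one_way_delay_us(km)
    print(f"{km:>5} km: {d:8.1f} us one-way, {2 * d:8.1f} us round trip")
```

The result is roughly 74 microseconds one-way at 15km and about 2.9 milliseconds at 600km. Inside a cluster, where accelerators synchronize constantly, even a couple of kilometers of cabling adds around 10 microseconds each way, consistent with Heyde’s view that networking distance caps practical cluster size.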

One could move to multi-story to pack the racks closer, of course. “The challenge with multi-story is that a) There’s an operation pain in the ass that comes with it; but b) there’s also a little bit of logistics variability on when we start getting into these really big racks in the future,” he says. “If we’re talking about racks that are, like, 6,000-7,000 pounds, you start going into real funky building designs.”

Such a multi-story approach was announced this week by Microsoft, which launched its Atlanta data center based on its new Fairwater design. The company explicitly said it built the site as a two-story building to reduce the distance between racks. Frustratingly, however, the company refused multiple requests from DCD to disclose the actual size of the data center.

While a 5GW mega data center campus may not make sense, several 1-2GW campuses can still work together. “What we found is that the distance constraints that were originally considered about a year ago have somewhat relaxed, based on approaches we’re using for large-scale, multi-campus training.

“So we’re not necessarily looking at tight clusters associated with latency. Rather, we’re looking at clusters positioned, probably more at a country level, that can coordinate across as long as you have enough bandwidth.”

Google has already publicly revealed that it trained Gemini 1 Ultra across multiple data center clusters, while OpenAI is believed to be developing synchronous and asynchronous training methods for its future models. With Fairwater, Microsoft said that it plans to connect all its upcoming clusters over an AI Wide Area Network.
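Heyde’s caveat, “as long as you have enough bandwidth,” can be made concrete with a back-of-the-envelope transfer time. The sketch below is purely illustrative: the payload (one copy of a hypothetical 1-trillion-parameter model stored at 2 bytes per parameter) and the link speeds are assumptions, not figures from OpenAI, and real multi-campus training methods may exchange far less data per step than a full model copy.

```python
def transfer_time_s(payload_bytes: float, link_gbps: float, efficiency: float = 1.0) -> float:
    """Seconds to move `payload_bytes` over a link of `link_gbps` gigabits/s.

    `efficiency` in (0, 1] discounts protocol overhead; 1.0 means ideal line rate.
    Propagation delay is ignored.
    """
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    bits = payload_bytes * 8
    return bits / (link_gbps * 1e9 * efficiency)


# Hypothetical payload: a 1-trillion-parameter model at 2 bytes per parameter.
PAYLOAD_BYTES = 1e12 * 2

for gbps in (400, 3_200, 25_600):
    print(f"{gbps:>6} Gb/s: {transfer_time_s(PAYLOAD_BYTES, gbps):7.2f} s")
```

Under these assumptions, the same exchange takes 40 seconds over a single 400Gb/s link and well under a second over 25.6Tb/s of aggregate capacity, which is why fiber bandwidth, rather than distance alone, becomes the deciding factor for coordinating campuses.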

While some hyperscalers have dug direct fiber connections between distinct campuses, Heyde says the company isn’t currently considering it. “We’ve had some internal conversations; I’ve got to make sure we get some data center campuses first.”

Including Texas, OpenAI is considering developing as many as ten projects in the US, with the company saying in February it was looking at 16 states. “Candidly, I think we’re a bit higher than that right now,” Heyde says.

When we asked Heyde to rattle off what he’s looking for with those sites during a keynote discussion at this year’s DCD>New York event, he told us: “As we’re doing these multi-campus training runs, access to large bandwidth fiber is pretty critical.”

Powering Stargate

As for power, OpenAI is evaluating both “the substation readiness and the associated data center side, but then also the total power of that location,” Heyde says.

He continues: “I think one of the interesting things we’ve seen from the RFP process is that people frame their power availability very differently, depending on whether they’re talking about it from the perspective of a utility, a power provider, a data center operator, or a data center builder. The time horizon that people pin to that power varies quite a bit based on assumptions baked in.”

Malone concurs, noting that the company is “thinking about different ways of delivering the power that we need” with behind-the-meter projects “but also on the longer-term horizon, base load solutions that are carbon-free, like SMRs and fusion, that have to be part of our long-term solution.”

Even once grid power is secured, utilities are concerned about the massive power swings that a single coordinated cluster can cause. This August, OpenAI, along with Nvidia and Microsoft, published the paper ‘Power Stabilization for AI Training Datacenters,’ which examines how to minimize the problem.

“Just foundationally, we cannot burden our utility grid with something like that,” Malone says. “Connecting to the grid is hugely beneficial to us, so we have a number of methods for addressing those power swings. Be it on chip management, power management solutions, or using UPS to absorb those power swings.

“Over time, we’re going to see more and more developments in the area of capacitors that are closer to that chip load, so we can essentially use a very fast-reacting battery to absorb those swings versus managing them on the chip. The goal is to unlock as much performance out of the system as we possibly can. Moving that isolation solution to the capacitors or to the UPS is really what we need to do.”
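Malone describes using UPS systems or capacitors close to the chip load to absorb swings before they reach the grid. One generic way to model that idea is ramp-rate limiting: grid draw is only allowed to change by a fixed amount per time step, and a storage buffer makes up the difference. The sketch below is a minimal illustration under loudly stated assumptions: a lossless, instantly responding store, and an invented load profile and ramp limit. It is not the method from the OpenAI, Nvidia, and Microsoft paper.

```python
from __future__ import annotations


def smooth_with_storage(load_mw: list[int], max_ramp_mw: int, capacity_mwh: float,
                        soc_mwh: float, step_h: float) -> tuple[list[int], list[float]]:
    """Ramp-rate limit grid draw, letting an idealized store cover the difference.

    Each step, grid draw moves toward the load by at most `max_ramp_mw`. The
    store discharges when load exceeds grid draw and charges when it is below.
    The store is lossless (an assumption); a RuntimeError is raised if its
    state of charge would leave [0, capacity_mwh].
    """
    grid: list[int] = []
    socs: list[float] = []
    draw = load_mw[0]
    for load in load_mw:
        # Move grid draw toward the load, clamped to the ramp limit.
        draw += max(-max_ramp_mw, min(max_ramp_mw, load - draw))
        gap_mw = load - draw  # > 0: store discharges, < 0: store charges
        soc_mwh -= gap_mw * step_h
        if not 0 <= soc_mwh <= capacity_mwh:
            raise RuntimeError("storage too small for this load profile")
        grid.append(draw)
        socs.append(soc_mwh)
    return grid, socs


# Hypothetical profile: a cluster swinging between 100MW and 40MW, one-minute steps.
load = [100, 100, 40, 40, 40, 40, 100, 100, 100, 100]
grid, socs = smooth_with_storage(load, max_ramp_mw=10, capacity_mwh=10.0,
                                 soc_mwh=5.0, step_h=1 / 60)
print("load:", load)
print("grid:", grid)
```

The grid sees a steady 10MW-per-step ramp instead of a sudden 60MW drop and rise, while the store soaks up the excess and then releases it. The trade-off is that the buffer must hold enough energy for the gap between load and grid draw; real systems must also handle conversion losses and response times, which this sketch ignores.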

Rival xAI has solved some of the issue with its Colossus site by pushing everything through a large deployment of Tesla Megapacks, likely made more affordable by Musk’s control over both businesses. “That’s a super interesting approach to the problem,” Malone says of the move.

“I don’t think there’s a definitive answer on what the right solution is yet, and it probably depends on the attributes of the workload and the scale. But I think storage, as close as possible to the hardware, is an extremely useful approach to the problem. In the intervening time period, we’ve got tools on the silicon to help manage this.”

Power availability has also been a hot-button issue for local communities, with conflicting reports on the impact of AI facilities on local electricity prices, something that has helped lead to a wave of anti-data center sentiment, and stymied some projects.

In the case of Stargate Wisconsin, codenamed Lighthouse, developer Vantage has pledged to deploy net-new solar, wind, and battery storage, and give excess power to Wisconsin customers. It also said it would underwrite 100 percent of the power infrastructure investment required by the utility.

Similarly, for the Michigan project, known as The Barn, Related Digital will fund a new battery storage project.

Power, however, is just one of the challenges projects of this scale face.

“If we’re building at many gigawatts of scale per year, what are the bottlenecks that show up right after power?” Malone asks. “Certainly, there’s craft labor. How do we apply design principles to improve the efficiency on the construction side? We know that there’s a finite number of human resources that are in the field, at this site [Abilene], for instance, there are just tons of people, right? So how do we actually scale the human component of the build as well?”

Then there’s land, but “the cool thing for us is that our physical footprint is a bit smaller based on the densification,” Heyde says. “And then the last thing that I would say is really critical is that we want to go places where people want us to be.”

A new type of data center

When OpenAI does go to those places, it could do so via partners, be it someone like Oracle, Crusoe, CoreWeave, or Core Scientific. Or it could build its own sites entirely.

“Stargate and OpenAI both have a self-build interest in mind, yeah,” Heyde says.

“It will definitely be a novel design, comparatively. The Abilene design was really anchored around something else, and then it got repurposed into the GB200 approach. We’re working on it, and a self-build approach will be anchored around how we would go after a large training regime in the future. There are some exciting things you can do from a redundancy and risk tolerance perspective that you probably wouldn’t do at a cloud site.”

In a different conversation, he tells us: “We’re designing around, effectively, a three-nines perspective rather than a five-nines perspective.” That is, building to an expectation of 99.9 percent uptime rather than 99.999 percent. “This gives us a lot of both design flexibility, but also certain trade-offs we can make in the power stack as well, as in the site selection trade-offs as well.

“Because our design is relatively unique, and because it is centered around these three-nine training workloads, we have a little bit of flexibility to play in different ways with different players that I think you might not see in more of an established reference design that gets punched out.”
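The gap between three and five nines is larger than the decimals suggest. A quick calculation, assuming availability is measured over a 365-day year of 8,760 hours:

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours; ignores leap years


def downtime_hours_per_year(nines: int) -> float:
    """Allowed downtime per year for an availability of 1 - 10**-nines."""
    return 10 ** -nines * HOURS_PER_YEAR


for nines in (3, 5):
    hours = downtime_hours_per_year(nines)
    print(f"{nines} nines: {hours:.4f} h/yr = {hours * 60:.1f} min/yr")
```

Three nines allows about 8.8 hours of downtime a year; five nines allows about 5.3 minutes. That hundredfold difference in tolerated downtime is the room Heyde describes for trade-offs in the power stack and in site selection.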

Here, Heyde is talking about training sites, or, as he likes to call them, “research-enabling clusters,” principally built for “pushing our capabilities on the model side forward.” This, he says, is different from the training versus inference binary that was originally seen as the two distinct AI workloads of the future.

“The means and mechanisms by which we advance our models have evolved as the industry has moved in different directions. So at this point, from the reasoning models, we’re able to push the capabilities of our software forward using things that are slightly different than classical training,” with models always in some form of training and inference.

At the same time, however, Stargate does plan to have more inference-focused compute, a shift from the initial vision of a single mega-cluster for training. This inference is alongside an ongoing extensive relationship with Microsoft Azure.

Some of the inference could take the form of aging training clusters in the years to come. As the bleeding-edge Nvidia GPUs get older, they could shift to servicing user demand, we suggest. “I think that is not a bad approach,” Heyde says. “I think there’s merit to that.”

The company is also looking to build specific inference clusters. “We could do an inference and a consumer use case that’s quite a bit smaller [than the 1GW campuses],” he says. “In fact, this is in the market now, but my team is looking at some smaller sites on how we would deploy – I don’t want to call them micro sites, they’re still pretty big – an inference or customer-facing site rapidly.”

He adds: “It is probably still too dynamic to truly say [what size they will be], but I can say that as we project out in the future, that part of our demand definitely increases. That is the customer-facing part of our demand. And so it’s not just from increasing customer engagement, but it’s also from more and more workloads being able to move into these more efficient sites – they’re not really Edge sites, but they’re more like Edge site regimes. You just have more demand that gets channeled there.”

Some customers require dedicated compute with dedicated service level agreements (SLAs). “We have to meet the SLA. We actually have very strict latency targets,” OpenAI’s Morris says. “We never want the consumer, be it an individual user or an enterprise, having latency issues, so we always try to balance it.”

Depending on the model, however, latency demands are generally “looser than people think,” Heyde says.

We talk the night after OpenAI reveals it is set to buy Io, the company led by Apple design guru Jony Ive, with a plan to develop consumer products. The company has yet to reveal any concrete details about the devices, but an announcement video references an always-on, always-listening system to allow ChatGPT to be involved at any moment.

Such a system, should it prove popular, would require even more compute and storage.

Heyde isn’t involved in the project, but says of the data center side: “The numbers that Sam [Altman, OpenAI CEO] shared with us earlier today, were like ‘add an extra zero’ type stuff. It seems like I’ve never had a demand break, including when DeepSeek was really shaking the market.”

Uncertainty vs inevitability

Another market shock has been the US President’s war on the global economy, with sudden and drastic tariffs levied against virtually the entire world (tariffs are paid by the importer, not the exporter). Some of those tariffs have been walked back, some have been ramped up. At the time of writing, the state of tariffs is too fluid to make listing the individual percentages worthwhile.

TD Cowen analysts warned that tariffs could add 5 to 15 percent to the cost of Stargate data center builds, an enormous sum given the scale of the joint venture. “This is an interesting one,” Heyde says of the tariffs.

“A lot of the sourcing decisions that I was making were already North America-based. So the nice thing was that I didn’t really see major tariff problems from that, [but] I’ve seen other projects that we were looking at that had major, major disruptions, though. The volatility is half of the challenge.”

For now, development is continuing unabated. Speed remains the single greatest priority, with OpenAI locked in a race for a prize it believes easily outweighs any momentary cost increases.

“In some ways, that drives us to a little bit of short-termism on our decisions,” Heyde admits. “We want to really be unlocking training. I actually think we’re okay with a little bit of cost inefficiency – I want to say that delicately – to enable good training moments.”

A desire for speed, together with a large wallet, does come with its own challenges, and the company is inundated with development offers, most of which are unsuitable.

“Imagine you’re at a restaurant, and there are just so many choices on the menu, and it’s time for us to make sure we pick the ten choices we want, not the 100 choices that are at our disposal,” Heyde says.

Of those ten choices, “we’re trying to think about two cycles out of our data center design, you don’t just get one and done,” Heyde says.

“It’s hard when we’re talking about these power densities and it’s also hard for me to not look at the lay of the land and be like, ‘are racks going to be our form factor in eight years?’ The whole rack form factor might change because of the power densities we’re talking about.”

He says he can see data centers of the future containing what is “effectively liquid, reconfigurable, compute.” By this, Heyde doesn’t mean immersion cooling systems, but “dynamic liquid conduits that can reconfigure as part of the data center compute infrastructure.”

Similarly, Malone has visions of radically different data centers of tomorrow.

“We all talk about gigawatt-scale computing now, as if it’s just a thing,” he says. “But it’s really unprecedented. We’re thinking about how we scale differently. If you look at the way we built data centers 15 years ago, when 30MW was a massive amount, oftentimes what we’ve done to build the 300MW data center is just do 10 times as much of the same thing.”

For the next scale-up, OpenAI doesn’t want to simply multiply by ten again. “How can we think about larger components, more integration, more manufacturing-centric kind of practices to actually deliver at that incredible scale?”

Echoing Heyde’s comments, he adds: “We’re thinking about the size of the components that we put into these systems and how that needs to change, and thinking about it right down to the rack.

“A 19-inch cabinet, it’s been around for many, many years, and I don’t think that that needs to be so, right? If we’re thinking about speed, if we’re thinking about a useful lifetime for a cluster measured in single-digit years, why not deliver the capacity in a way that allows for quick changes or quick deployment, at some sacrifice that we currently may not be able to comprehend?”

Another issue with current approaches is that “data centers are designed around human constraints,” Malone says. “So can we think differently about temperature and height and weight? We’re talking about one megawatt racks in some hypothetical, not so far out future, those are extremely different scenarios than the 10kW racks that were predominant two years ago. We really need to approach that whole ecosystem differently, from the technology side to the human side.”

Whatever the new rack may look like, OpenAI plans to share its designs, Malone says. “I think it’s incumbent on us as an industry to kind of move this forward so that we can all benefit from these changes. I don’t think there’s tremendous value in keeping our infrastructure secret, like a secret rack form factor – we can leverage scale across the industry. I don’t want to be too precious about some of those solutions that would benefit if more people adopt it.”

Heyde adds: “This was sci-fi stuff five years ago, but not that far out now on what we’re talking about.”

Should his own sci-fi effort succeed, Heyde will have been partly responsible for bringing online the largest clusters of compute the world has ever seen, potentially supporting superhuman intelligences – or, at least, simulacra of intelligence.

“The mission is to create AI that solves the world’s hardest problems and that, to me, is a very exciting mission. Having spent many years of my life pursuing a PhD and expanding science, to be taking a real shot at making something that can solve the world’s hardest problems is pretty exciting,” he says.

We asked Heyde how he’d use such an AGI if he could put it on a core challenge with Stargate: “What’s hard for me to wrap my mind around is the sequencing of what to do to unlock the best approach. It’s not so much the sequencing from a demand and supply mapping perspective, but rather which capabilities and market signals I need to unlock to create the best goal state at different training time points.

“In some ways, you unlock something by doing an Abilene; in other ways, you unlock something by doing a self-build somewhere. In other ways, you unlock something by working with another partner. Those capabilities and market signals simulated out take you to different end states. Because of the variable space and the opportunity space, it’s kind of hard to conceive what the perfect global solution is, even though locally, I can see a lot of positive local solutions.”


Read the original article: https://www.datacenterdynamics.com/en/analysis/openai-building-stargate-nvidia-oracle-chatgpt/