Like lock and key, cooling and humidity come as a pair. Controlling the humidity of your racks is just as important as controlling the temperature. However, humidity is often the forgotten half of the equation.

Balancing humidity can be a challenge: the air can be neither too moist nor too dry. Too much humidity can lead to the corrosion of key metal components across the white space. However, some moisture is still needed to avoid the build-up of static electricity, which can discharge as sparks that interrupt operations.

Finding the balance between the two is a challenge, says Ken Fulk, vice president of the American Society of Heating, Refrigerating, and Air Conditioning Engineers (ASHRAE).

ASHRAE now recommends a range of between 20 and 80 percent relative humidity. It’s called relative humidity because the figure expresses the moisture in the air as a percentage of the maximum the air could hold at its current temperature. Fulk explains there is “no magic number” and, in reality, most components nowadays can withstand humidity ranges of between 10 and 90 percent before experiencing any undesirable outcomes. Because of this, fixating on a number or a range is often unhelpful, he says.

Steve Skill, senior application engineer at Vertiv, adds that typically it is legacy data centers that are most sensitive to humidity fluctuations, as newer facilities are equipped with more resilient components, capable of withstanding higher and lower humidity levels.

Ultimately, Fulk says, “components don’t like change,” so controlling humidity is more about keeping it the same, rather than aiming for a specific number.

In 2008, ASHRAE issued new guidance, reducing the lower limit to 20 percent from the previously issued 40 percent. It also set guidance on dew point, another measure of humidity.

Outages caused by humidity issues are rare, but not unheard of. Last year, the University of Utah experienced widespread IT failures due to increased humidity outdoors causing increased humidity inside, triggering an outage.

Maurizio Frizziero, director of cooling innovation and strategy at Schneider Electric, explains the ideal dew point sits between 5°C (41°F) and 15°C (59°F). The dew point is the temperature to which a body of air must cool before it can no longer hold its water in gas form; essentially, the temperature at which condensation happens.
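To make the relationship between temperature, relative humidity, and dew point concrete, here is a minimal Python sketch. It assumes the Magnus approximation with one commonly used set of coefficients, which the article itself does not specify; the function names and the sample reading are hypothetical and chosen only for illustration.

```python
import math

# Magnus approximation coefficients (an assumption; other published sets exist).
MAGNUS_B = 17.62
MAGNUS_C = 243.12  # degrees Celsius


def dew_point_c(temp_c: float, rh_percent: float) -> float:
    """Approximate dew point in °C from air temperature and relative humidity."""
    if not 0 < rh_percent <= 100:
        raise ValueError("relative humidity must be in (0, 100]")
    gamma = math.log(rh_percent / 100.0) + MAGNUS_B * temp_c / (MAGNUS_C + temp_c)
    return MAGNUS_C * gamma / (MAGNUS_B - gamma)


def in_dew_point_band(dew_c: float, low_c: float = 5.0, high_c: float = 15.0) -> bool:
    """True if the dew point sits in the 5-15°C band quoted in the article."""
    return low_c <= dew_c <= high_c


if __name__ == "__main__":
    # Hypothetical rack inlet reading: 22°C at 50 percent relative humidity.
    td = dew_point_c(22.0, 50.0)
    print(f"dew point = {td:.1f} °C, within band: {in_dew_point_band(td)}")
```

Because dew point depends only on how much water the air holds, it does not change as the same air is warmed or cooled, which is part of why it is a convenient measure to compare across a data hall.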

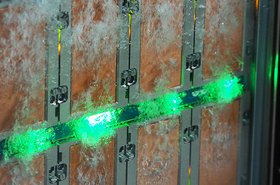

Vlad-Gabriel Anghel, director of solutions engineering at DCD’s training unit, DCD>Academy, says that dew point has become a popular choice for monitoring humidity because it can be tracked for each rack with an individual sensor. He explains that temperature and humidity can vary from rack to rack in a data hall, depending on how close each rack is to the cooling or humidity system. Sensors attached to each rack allow operators to monitor humidity levels on an individual basis.

Getting hot and steamy over time

Fulk has been designing and deploying data center cooling systems for decades. When he first began, data center facilities had a fixed density with “heat being produced on a square foot basis.” Conventional air conditioning was more than enough to distribute the air and maintain optimal humidity levels. Since then, and more crucially since the surge in AI and cloud computing workloads, maintaining humidity is not as easy as installing one “clamshell” arrangement, says Fulk.

“You can no longer have nice rows of equipment and perfectly arranged computer rooms with air conditioning units,” he explains. Selecting the ideal humidity range for a facility is complicated by the fact that different components have different parameters.

ASHRAE has set classifications for data center components: A1, A2, A3, and A4. DCD>Academy’s Anghel explains that these classifications effectively measure how sensitive a data center component is to humidity and temperature changes. For example, A1, the strictest classification, states that equipment in that category can only operate between 15°C (59°F) and 32°C (89.6°F) at between 20 and 80 percent relative humidity. It is in A1 that you will find the majority of servers and the most critical infrastructure.

Anghel says the difficulty is selecting the right humidity and temperature that satisfies all components of your data center ecosystem. Between all the classifications of all the equipment, there is likely an overlap, or in other words ‘the sweet spot.’ Setups have to be thought of as an entire ecosystem, with considerations as to how those parts interact with each other.
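The "sweet spot" Anghel describes can be thought of as the intersection of every component's allowable range. The sketch below illustrates that idea in Python. The A1 figures come from the article; the second envelope and all of the names are hypothetical placeholders, not ASHRAE values.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class Envelope:
    """Allowable operating range for one piece of equipment."""
    temp_min_c: float
    temp_max_c: float
    rh_min: float  # percent relative humidity
    rh_max: float


def sweet_spot(envelopes: Iterable[Envelope]) -> Optional[Envelope]:
    """Return the range shared by every envelope, or None if there is no overlap."""
    items = list(envelopes)
    if not items:
        return None
    # The shared range starts at the highest minimum and ends at the lowest maximum.
    t_lo = max(e.temp_min_c for e in items)
    t_hi = min(e.temp_max_c for e in items)
    rh_lo = max(e.rh_min for e in items)
    rh_hi = min(e.rh_max for e in items)
    if t_lo > t_hi or rh_lo > rh_hi:
        return None
    return Envelope(t_lo, t_hi, rh_lo, rh_hi)


# A1 range as quoted in the article.
A1 = Envelope(15.0, 32.0, 20.0, 80.0)
# Hypothetical, more sensitive legacy device (illustrative numbers only).
LEGACY_DEVICE = Envelope(18.0, 27.0, 40.0, 60.0)

if __name__ == "__main__":
    print(sweet_spot([A1, LEGACY_DEVICE]))
```

In this example the narrower device dictates the result, which is the practical point: the most sensitive component in the ecosystem sets the limits for everything around it.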

The most important consideration is the cooling method. Fulk says liquid or direct-to-chip cooling typically “lessens the impact of humidity” because the chip is essentially surrounded by moisture anyway, making it less of a challenge to keep conditions consistent. Even so, Fulk adds that an operator has to ensure that humidity is low enough to prevent condensation at the temperature of the liquid being circulated in the system.
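Fulk's condensation point can be turned into a rough calculation: condensation forms on a surface once the dew point of the surrounding air reaches that surface's temperature. The Python sketch below estimates the highest relative humidity a room could run at before that happens. It again assumes the Magnus approximation; the safety margin, function names, and example temperatures are hypothetical.

```python
import math


def saturation_vapor_pressure_hpa(temp_c: float) -> float:
    """Saturation vapor pressure over water in hPa (Magnus approximation)."""
    return 6.112 * math.exp(17.62 * temp_c / (243.12 + temp_c))


def max_safe_rh_percent(air_temp_c: float, coolant_temp_c: float,
                        margin_c: float = 2.0) -> float:
    """Highest room RH that keeps the dew point margin_c below the coolant temperature."""
    limit_c = coolant_temp_c - margin_c
    ratio = (saturation_vapor_pressure_hpa(limit_c)
             / saturation_vapor_pressure_hpa(air_temp_c))
    # Relative humidity cannot exceed 100 percent.
    return min(100.0, 100.0 * ratio)


if __name__ == "__main__":
    # Hypothetical case: 25°C room air and 17°C liquid supplied to the racks.
    rh_limit = max_safe_rh_percent(air_temp_c=25.0, coolant_temp_c=17.0)
    print(f"keep room humidity below roughly {rh_limit:.0f} percent")
```

The colder the circulating liquid is relative to the room, the lower this ceiling falls, which is why warmer liquid loops leave more room for humidity to drift.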

Frizziero agrees that liquid cooling systems require far less precise control of relative humidity. However, Fulk cautions that the building could easily grow mold in high humidity even if the servers remain unharmed.

Skill adds: “Certain chemicals in the atmosphere of a site can react with the moisture in the air to form acids.” Chlorides and sulfides in coal-burning countries like China and India can produce acids that could easily corrode components. So, keeping humidity low is still important.

Previously, data centers also had to consider the people working inside them, says Skill. High humidity made for intolerable working conditions. He says that nowadays facilities have fewer people working inside them, so this has become less of a consideration when selecting the optimum humidity.

Free cooling can be costly cooling

Free cooling can pose a challenge for maintaining consistent humidity, but only in terms of energy, Fulk says. For example, when operating in a humid location like Dallas, the air being brought into the facility must be ‘dried’ before it hits the servers, and this requires more energy.

Outside air is typically dehumidified by bringing the surface of the heat exchanger to a temperature lower than the set dew point, explains Frizziero. The air passing through leaves with only a fraction of the water it carried when it entered.

Fulk says somewhere like Phoenix might be warmer than Dallas, but is far less humid, thereby costing the operator far less in terms of energy. The opposite can be said for the Nordics. Granted, they have become a popular location for their cooler temperatures, but that is not to say the air there will be dry enough.

However, even if the air does need to be humidified or dehumidified, it will still require considerably less energy than “putting in refrigerants and glycol in a closed loop system,” says Anghel. Plus, it will be more cost-effective in comparison to an operator using liquid cooling in the same location.

Generally speaking, air is more likely to need dehumidifying than humidifying, says Skill. In these cases, operators would typically install a dehumidification plant separately from the facility to treat the air before it enters. Conapto is an example of a Nordic operator using a separate plant in this way.

“Every operator will be thinking about sustainability,” says Fulk, because cooling, temperature, and humidity control all require energy. When designing a facility, great consideration will go into making that facility as energy-efficient as possible.

“Specifically with AI, more so than cloud computing, it is important for cooling systems to be as efficient as possible to reduce the impact on our environment,” he says. Operators should be picking locations strategically to avoid the added expense and added environmental burden of dehumidifying and humidifying air.

AI might be the buzzword today, but it won’t be the only thing raising densities. There will soon be something else that changes compute as we know it, adjusting ASHRAE’s recommendations and the relevance of humidity. Until then, the best an operator can do is to stay consistent.

Read the original article: https://www.datacenterdynamics.com/en/analysis/consistency-is-key-the-importance-of-data-center-humidity/