Worldcoin, a crypto biometrics project cofounded by OpenAI’s Sam Altman and backed by big-name VCs such as a16z and Tiger Global, is at loggerheads with European data protection regulators, Sifted has learnt.

The Worldcoin mission is an eye-catching one: to go around the world scanning people’s eyeballs and faces with shiny chrome spherical devices known as ‘Orbs’, and to give each person a ‘WorldID’, a certificate verifying that an individual is a unique human being. Participants will also be given the project’s cryptocurrency, WLD, in exchange for taking part.

The project is managed by a company called Tools for Humanity, which is headquartered in San Francisco, but has its European headquarters and manufacturing facility in the German state of Bavaria.

Bavaria’s data protection authority (BayLDA) is now entering the final weeks of a two-year-long audit of Tools for Humanity.

The sticking point: how its iris capturing devices comply with the EU’s General Data Protection Regulation (GDPR).

The outcome, which is due to be presented in mid-September, will be a critical juncture in Worldcoin’s mission to create a global digital identity system, says BayLDA president Michael Will in an interview with Sifted.

“Once we, as the lead authority, say it doesn’t work, it won’t work anywhere in Europe,” says Will. “This is binding in every EU country so that’s why many will look at our assessment.”

According to its website, Tools for Humanity currently operates in 13 countries — including Germany and Austria — but it’s faced regulatory challenges elsewhere, particularly in Europe.

However, the company insists its technology anonymises data in such a way that it shouldn’t fall under GDPR rules.

“Our goal is to know nothing about the person,” Tools for Humanity chief digital privacy officer Damien Kieran tells Sifted. “Our commitment is to make this secure, to anonymise users, to not collect data.”

AI, crypto and biometrics

Founded in 2019, Tools for Humanity is the brainchild of Altman, fintech entrepreneur Max Novendstern and Alex Blania, a former California Institute of Technology master’s student who took the helm of the company after Novendstern left in 2020.

The company’s goal is to create a digital identification system that proves one’s humanity, as advances in AI, deepfakes and synthetic media obscure the differences between man and machine, explains Kieran.

A 2022 report by the European Union’s law enforcement agency Europol suggested that as much as 90% of the internet’s content could be synthetically generated by 2026.

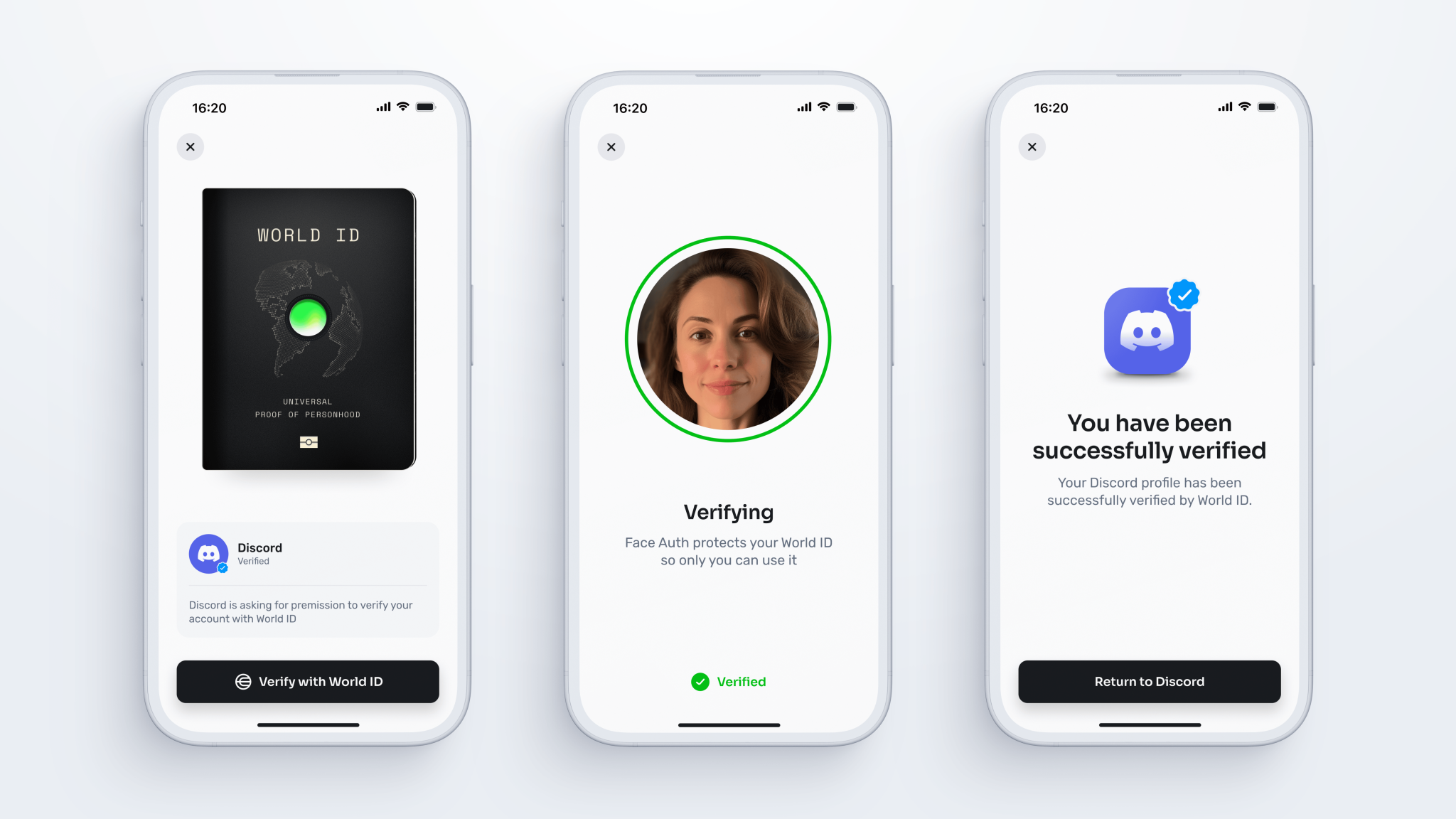

Once someone has a WorldID, the idea is that they’ll be able to log into websites such as Discord or Shopify with an ID that proves they’re flesh and blood and not an AI bot.

The company’s cryptocurrency WLD is currently valued at $1.61, according to crypto price comparison website CoinMarketCap. WorldID holders are given the opportunity to claim 60 WLD tokens over the course of 12 months.

Regulatory headwinds

Those lofty ambitions may have caught the attention of investors, who have so far put in $250m, and of the more than 6.5m people signed up to the app, according to its website.

Regulators, however, haven’t been quite as hospitable since the project launched in July last year.

In Hong Kong, watchdogs ordered Worldcoin to cease operations in May this year, citing privacy concerns. Earlier this month, the Kenyan government ordered the project to stop signing up new users.

Authorities in Spain and Portugal suspended the project earlier this year over concerns about the processing of sensitive data after receiving hundreds of complaints that it had scanned children’s eyeballs, Will at BayLDA says.

“[There was] a situation, especially in Portugal and Spain, where the registration procedure was weak,” he says. “Many non-adults were driven to make the registration on a cell phone and share their iris data with the Orb — this went rather wrong.”

And after the Orbs made their debut in London last July, the UK Information Commissioner’s Office announced it was making inquiries into the project, telling crypto publication CoinDesk that organisations “need to have a clear lawful basis to process personal data.” The Orbs are not currently operating in the UK market.

Tools for Humanity’s Kieran maintains that, while it met industry standards for age verification at launch, the company has since implemented more stringent controls. A third party now checks IDs to confirm an individual is over 18 years old before they’re allowed entry to an Orb facility.

There are further plans to update the process by enabling the Orbs to run an age verification model that estimates a user’s age.

“We’re new to operating these locations so we’re learning as we go too, right,” says Kieran. “That’s not saying it’s okay. It’s saying we have to get better and we have to improve.”

Invasion of privacy?

But age verification complaints could be a problem that’s easier to fix than the ongoing BayLDA investigation.

The Bavarian agency is looking more broadly at how the startup stores and processes personal data, and at how its tech complies with GDPR. The three main issues are the legal basis for processing such sensitive data, the subject’s rights over that data (e.g. in a request for deletion) and how securely that data is stored.

“There are some more detailed questions: what could, in a case of internal misuse, Worldcoin do with the data? Could there be an insider that does something wrong?” Will says. “That’s also something that needs to be kept in mind for data protection.”

Tools for Humanity’s Kieran claims that the company doesn’t itself store any personal data collected by the Orb. Instead, the photo of the iris is translated into a unique code to be encrypted. It’s then chopped up into pieces to be stored in independent databases that aren’t owned or operated by the company.

The only person able to restore the code to its original order is the user, through an encrypted private key stored on their phone. One caveat is that Worldcoin will enable some users to opt into sharing their data for training purposes.
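As a rough illustration of what "chopping up" a code into pieces held by separate parties can look like, here is a minimal sketch of XOR-based secret splitting in Python. It is not Worldcoin's actual scheme, which the article does not describe in detail. The function names, the sample `iris_code` value and the choice of three shares are all assumptions made for illustration, and the encryption and private-key steps mentioned above are left out.

```python
import secrets
from functools import reduce


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """XOR two equal-length byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))


def split_secret(secret: bytes, n_shares: int) -> list[bytes]:
    """Split `secret` into n shares; all n are needed to rebuild it."""
    if n_shares < 2:
        raise ValueError("need at least two shares")
    # n-1 shares are pure random noise of the same length as the secret.
    random_shares = [secrets.token_bytes(len(secret)) for _ in range(n_shares - 1)]
    # The last share is the secret XORed with every random share.
    final_share = reduce(xor_bytes, random_shares, secret)
    return random_shares + [final_share]


def combine_shares(shares: list[bytes]) -> bytes:
    """XOR all shares together to recover the original secret."""
    return reduce(xor_bytes, shares)


# Hypothetical 8-byte stand-in for an iris-derived code (illustrative only).
iris_code = bytes.fromhex("a3f1c2997b04e6d8")
shares = split_secret(iris_code, 3)  # e.g. one share per independent database
restored = combine_shares(shares)
```

In this sketch, any single share on its own is indistinguishable from random bytes; only a party holding every share can rebuild the code. Whether data handled this way still counts as personal data under GDPR is exactly the question the regulator and the company disagree on.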

Tools for Humanity is also at odds with the regulator over whether the anonymised data it stores comes under the right to deletion stipulated by GDPR.

“Under GDPR, we believe that, because it’s anonymised, it doesn’t have to be deleted,” says Kieran.

“This is a really important point… with all due respect to the DPAs (data protection authorities), they’re applying a law that was crafted 15 years ago,” he continues. “At this point the technology has moved on — we’re using some of the most advanced privacy enhancing technologies.”

All eyes on Worldcoin

Whatever decision BayLDA takes next month, it will be valid and applicable across the EU. It is also likely to impact Tools for Humanity’s relationships with regulators across the world.

BayLDA’s Will says that he’s been in discussions with regulators in Ghana, Argentina and the UK since the beginning of the authority’s Worldcoin assessment.

“I would expect that my British colleagues would look intensively on our decision, that they take it into account,” he says.

If BayLDA does rule against the company, it will be able to appeal the decision.

For now, however, Worldcoin continues to push ahead with its mission to capture every eyeball in the world. Last month, it launched its WorldID Orb verifications in Austria, and it plans to get to work in more of Europe soon.

“Our goal over the coming months is to more strategically roll out in the EU and across APAC,” says Kieran.

Read the original article: https://sifted.eu/articles/worldcoin-regulation/