While the pace of AI development and adoption has been swift, trust is lagging behind.

A recent KPMG study that surveyed 48,000 people across 47 countries found that only 46% of people are willing to trust AI systems, while 70% believe regulation is needed.

Without guidance around quality and assurance, the full potential of AI is unlikely to be realised.

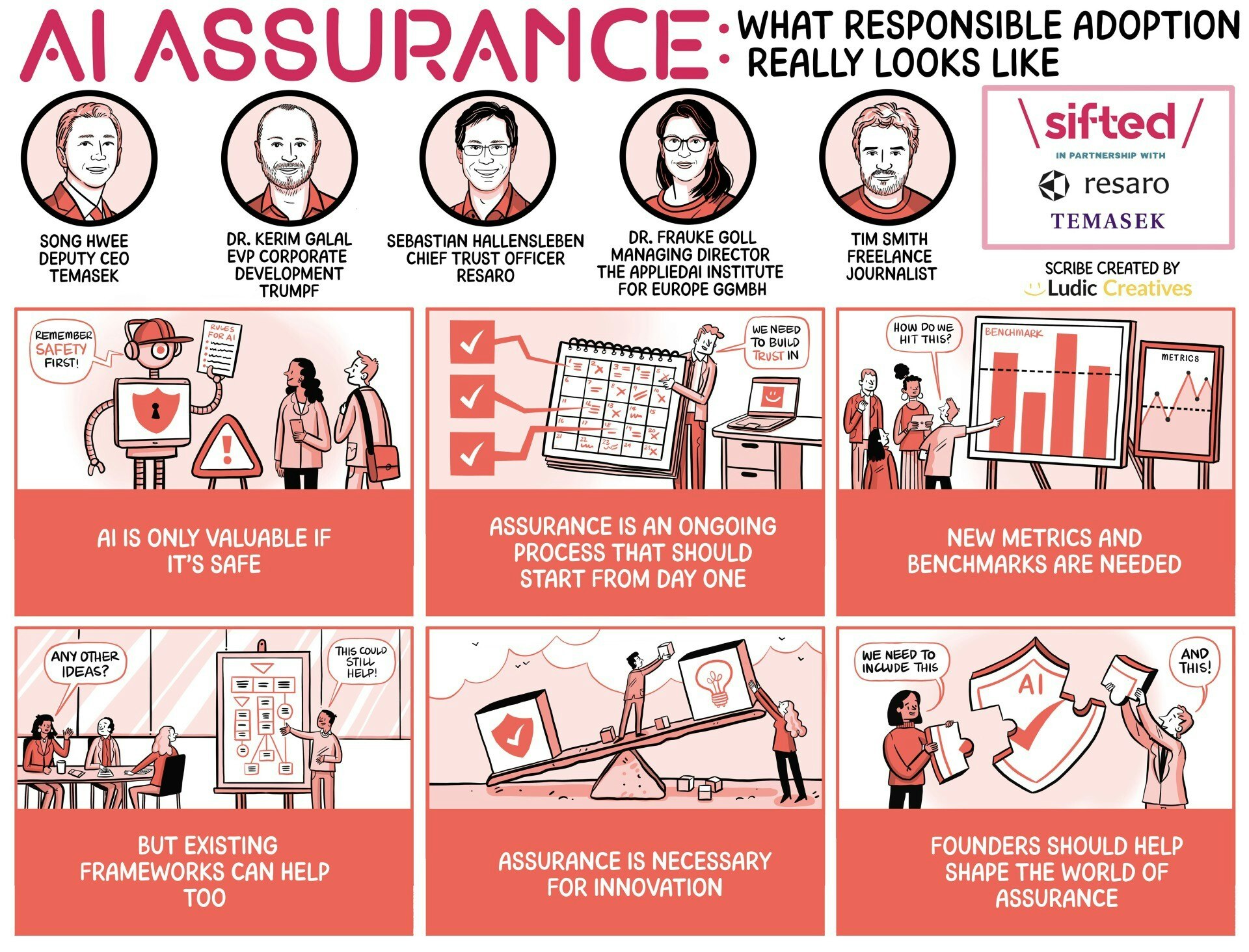

To delve deeper into how startups can find practical pathways for AI assurance in an era of rapid adoption, Sifted sat down with an expert panel.

We discussed the most effective ways to measure and communicate trust in AI systems, how existing frameworks can help validate new AI systems and how the technology poses new questions for mitigating risk.

Participating in the discussion were:

- Song Hwee Chia, deputy CEO of Temasek, a global investment firm headquartered in Singapore.

- Sebastian Hallensleben, chief trust officer at AI test lab Resaro.

- Dr Kerim Galal, executive vice president of corporate development, covering strategy, AI, M&A and in-house consulting, at German machine tools and laser tech company Trumpf.

- Dr Frauke Goll, managing director at the AppliedAI Institute for Europe GmbH.

Here are the key takeaways:

1/ AI is only valuable if it’s safe

When Air Canada’s customer service chatbot incorrectly offered a discount to a traveller, the airline claimed it wasn’t responsible for the chatbot’s actions. A civil court disagreed.

Sebastian Hallensleben, from independent, third-party AI assurance provider Resaro, said this type of case highlights the need for all businesses which use AI to have the appropriate safeguards in place.

That’s particularly true in sectors such as finance, where trust and compliance are paramount, Frauke Goll added.

“AI has been used for a long time in fraud detection systems, credit scoring and loan approvals in banking,” she said. “Model validation and internal risk management are essential to build trust around AI-driven decisions and prevent algorithmic bias.”

For investors like Temasek (which has backed the likes of Adyen and Stripe), assurance is becoming a key consideration at the due diligence phase.

“We put [AI] in the same category as cyber security and sustainability – it has a direct impact on our reputation and the trust of our stakeholders.” — Song Hwee Chia, Temasek

2/ Assurance is an ongoing process that should start from day one

For Germany-based Trumpf, which develops specialised technology for the manufacturing industry, the approach has been to involve the governance team in each project from day one.

Initially, the company offered AI as an option to clients. Now, AI systems are being applied internally to processes, predicting maintenance needs and monitoring machinery remotely, with staff also encouraged to experiment with AI productivity tools (within stated parameters).

“It’s mission critical we pay a lot of attention to governance to ensure a high level of AI trustworthiness,” Galal said, adding that AI assurance is almost more important than cybersecurity:

“With AI, it’s not just about blocking systems, it’s about influencing systems and affecting individual safety. The attention on AI assurance is way higher, and that’s a good thing.” — Kerim Galal, Trumpf

3/ New metrics and benchmarks are needed

What’s needed is the development of more frameworks and metrics so that companies can better measure what good AI looks like, Song Hwee Chia said.

Because of this, Temasek is working on a risk assessment framework to evaluate various dimensions of AI adoption, including whether companies are using AI in a responsible and safe manner.

A lack of benchmarks and metrics is causing issues for customers too, Hallensleben said.

Resaro is working with the Singapore government, for example, on a project to help establish benchmarks on the ability of AI tools to spot deep fakes.

“We would never expect to be sold a car, for example, just because the manufacturer says ‘it’s great, it’s safe, it conforms to regulation.’ But in AI that’s exactly where we are right now.” — Sebastian Hallensleben, Resaro

4/ But existing frameworks can help too

However, Hallensleben also believes existing standards should not be overlooked.

“There’s sometimes this misconception that just because something contains AI, everything changes,” he said. “But ultimately, if you’re a factory worker working next to a robot, you want that robot to help you rather than harm you, whether it’s controlled by AI or not. The traditional notions of safety still apply.”

Organisations which are unsure about what solution to use should take inspiration from the way they’ve always evaluated new technology proposals.

“If you put yourselves in a procurement department, they’re very used to putting out requests for quotations asking for proposals for solutions with certain features and capabilities,” said Hallensleben. “Those proposals come back, you compare them and eventually you decide which one to buy. This is exactly the same process for AI, but companies need the ability to compare the proposals that come back.”

“There is a lot in the testing and the certification of non-AI products and solutions that we can learn from,” Hallensleben added.

5/ Assurance is necessary for innovation

The difficulty with determining metrics for good AI is that ‘good’ depends on who you ask: the business teams, governance teams or technical teams. To tackle this, the teams need a “shared language” to build a common understanding of the quality levels required in each dimension. This is essential for tight, innovation-friendly feedback cycles, and is therefore an integral part of assurance.

Most of the time, there are trade-offs involved and more assistance is needed to help organisations navigate them. At the AppliedAI Institute for Europe GmbH, which generates and communicates high-quality knowledge about trustworthy AI, the team has been developing guidance, training and resources for SMEs looking to innovate with AI.

“Companies really need support when it comes to implementing assurance systems for AI,” Dr Goll said. “They need to be able to do a good risk assessment for themselves in whatever AI system they want to use.”

“It’s really important we find a good balance between what is really necessary, what is good enough, and what we need to regulate.” — Frauke Goll, AppliedAI Institute for Europe GmbH

6/ Founders should help shape the world of assurance

Regulation around AI will continue to evolve, but Hallensleben recommends founders get involved in the shaping of this new world.

“Don’t just wait for regulations and standards to come to you, take an active role in shaping them and making sure that they are practical,” he said.

The hype around the development of agentic AI, which Gartner predicts will autonomously resolve 80% of customer service issues by 2029, highlights the need to get this right soon, Chia added.

“With AI, we have the opportunity to do it right from the start. You can’t add AI assurance, safety and fairness after the fact. This must be done as part of your system, product, services, and design.” — Chia

Read the original article: https://sifted.eu/articles/regulation-ai-adoption-brnd/